Fixed-length semi-lockless queues revisited

There were some great comments on my previous post, both in comments here and on the Google Plus post which points to it. I'd like to address the point here, now I've had a few moments to do follow-up work.

One anonymous commenter, as well as Stephen Hemminger via email, point to the existing lockless queue code in liburcu. I had actually waded through this before (I say waded, because it's wrapped in a few layers which I find annoying; there's a reason I write little CCAN modules). It's clever and genuinely lockless; my hat off to , but it only works in conjunction with RCU. In particular, it's an unlimited-length queue which uses a dummy element to avoid ever being empty, and the fact that it can safely traverse the '->next' entry even as an element is being dequeued, because the rules say you can't alter that field or free the object until later.

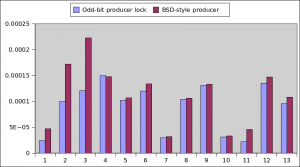

Stephen also pointed me to Kip Macy's buf_ring from FreeBSD; it uses two producer counters, prod_head and prod_tail. The consumer looks at prod_tail as usual, the producers compare and swap increment prod_head, then place their element, then wait for prod_tail to catch up with prod_head before incrementing prod_tail. Reimplementing this in my code showed it to be slower than the lower-bit-to-lock case for my benchmarks, though not much (the main difference is in the single-producer-using-muliple-producer-safe-routines, which are the first three benchmarks). I ignored the buf_ring consumer, which uses a similar two-counter scheme for consumers, which is only useful for debugging, and used the same consumer code as before.

Stephen also pointed me to Kip Macy's buf_ring from FreeBSD; it uses two producer counters, prod_head and prod_tail. The consumer looks at prod_tail as usual, the producers compare and swap increment prod_head, then place their element, then wait for prod_tail to catch up with prod_head before incrementing prod_tail. Reimplementing this in my code showed it to be slower than the lower-bit-to-lock case for my benchmarks, though not much (the main difference is in the single-producer-using-muliple-producer-safe-routines, which are the first three benchmarks). I ignored the buf_ring consumer, which uses a similar two-counter scheme for consumers, which is only useful for debugging, and used the same consumer code as before.

Arjen van de Ven makes several excellent points. Firstly, that transaction-style features may allow efficient lock-ellision in upcoming Intel CPUs (and, of course, PowerPC has announced transaction support for Power8), so we'll have to revisit in a few years when that reaches me.

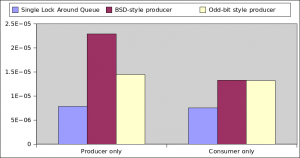

His more immediate point is thatuncontended locks are really cheap on recent CPUs; cheaper than cache-hot compare-and-swap operations. All the benchmarks I did involve everyone banging on the queue all the time, so I'm only measuring the contended cases. So I hacked my benchmarks to allow for "0 consumers" by having the producer discard all the queue contents every time it filled. Similarly, filling the queue with junk when it's empty for a "0 producers" benchmark.

Here we can see that the dumb, single lock comes into its own, being twice as fast as my optimal-when-contended version. If we just consider the common case of a single writer and a single reader, the lockless implementation takes 24ns in the contended case, and 14ns in the uncontended cases, whereas the naive locked implementation takes 107ns in the contended case and 7ns in the uncontended case. In other words, you'd have to be uncontended over 90% of the time to win. That can't happen in a naive implementation which wakes the consumer as soon as the first item has been inserted into the queue (and if you implement a batch version of queue_insert, the atomic exchange gets amortized, so it gets harder to beat).

Here we can see that the dumb, single lock comes into its own, being twice as fast as my optimal-when-contended version. If we just consider the common case of a single writer and a single reader, the lockless implementation takes 24ns in the contended case, and 14ns in the uncontended cases, whereas the naive locked implementation takes 107ns in the contended case and 7ns in the uncontended case. In other words, you'd have to be uncontended over 90% of the time to win. That can't happen in a naive implementation which wakes the consumer as soon as the first item has been inserted into the queue (and if you implement a batch version of queue_insert, the atomic exchange gets amortized, so it gets harder to beat).

For the moment, I'm sticking with the previous winner; there's still much to do to turn it into a usable API.