Fixed-length semi-lockless queues...

One of my vacation project was to look at a good queue implementation for ccan/antithread. I read a few papers, which mainly deal with generic link-list-style queues (I got quite excited about one before I realized that it needed a 128-bit compare-and-swap for 64 bit machines). I only really need a fixed-length queue of void *, so I set about implementing one.

You can find the cleaned-up version of my explorations on github. For my implementation I use a tail counter, 32 void * entries, and a head counter, like so:

#define QUEUE_ELEMS 32

struct queue {

unsigned int head;

unsigned int prod_waiting;

unsigned int lock;

void *elems[QUEUE_ELEMS];

unsigned int tail;

unsigned int cons_waiting;

};

The head and tail counters are free running to avoid the empty-or-full problem, and the prod_waiting and cons_waiting are for a future implementation which actually does sleep and wakeup (I spin for my current tests).

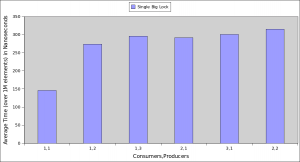

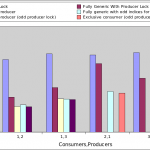

The simplest implementation is for both producers and consumers to grab the lock, do their work, then drop the lock. On my 32-bit x86 dual core 2 HT laptop, with 1 producer on cpu0 and 1 producer on cpu1 (ie. two hyperthreads of same core), it takes about 179 usec to enqueue and dequeue each element (but hugely variable, from 73 to 439 ns). You can see that (as expected) the 2 and 3 producers cases are quite expensive, though not so bad if there are 2 producers and 2 consumers.

The simplest implementation is for both producers and consumers to grab the lock, do their work, then drop the lock. On my 32-bit x86 dual core 2 HT laptop, with 1 producer on cpu0 and 1 producer on cpu1 (ie. two hyperthreads of same core), it takes about 179 usec to enqueue and dequeue each element (but hugely variable, from 73 to 439 ns). You can see that (as expected) the 2 and 3 producers cases are quite expensive, though not so bad if there are 2 producers and 2 consumers.

Lockless dequeue is quite easy:

- Read tail counter, then read head counter (order matters!)

- If it's empty, wait until head changes).

- Grab entry[tail % 32].

- Try to compare and swap the tail to tail+1. If not, we raced, so goto 1.

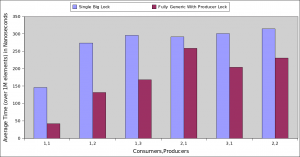

But lockless insert is harder, so I asked Paul McKenney who detailed a fairly complex scheme involving two offsets and some subtlety on both production and consumption side, and ended with "Now, are you -sure- locking is all that bad? ;-)". So I went for a big lock around insertion to begin with. It's generally a little better, particularly for the common case of a single consumer and a single producer.

But lockless insert is harder, so I asked Paul McKenney who detailed a fairly complex scheme involving two offsets and some subtlety on both production and consumption side, and ended with "Now, are you -sure- locking is all that bad? ;-)". So I went for a big lock around insertion to begin with. It's generally a little better, particularly for the common case of a single consumer and a single producer.

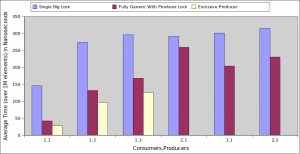

It's worth noting that if you know you're the only producer, you can skip the locks so I re-ran the benchmarks with a "queue_add_excl" implementation for the single-producer cases, as seen on the right.

It's worth noting that if you know you're the only producer, you can skip the locks so I re-ran the benchmarks with a "queue_add_excl" implementation for the single-producer cases, as seen on the right.

You can similarly simplify the single consumer case, though it makes little difference in my tests.

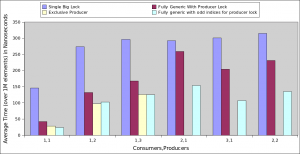

However, you can do better than a straight naive lock: you can use the lower bit of the head counter to exclude other producers. This means a production algorithm like so:

- Read head. If it's odd, wait.

- Read tail.

- If queue is full, wait for tail to change, then goto 1.

- Compare and swap head to head + 1; if it fails, go to 1.

- Store the element.

- Then increment the head.

For simplicity, I made the tail counter increment by 2 as well, and the consumer simply ignores the bottom bit of the head counter. Avoiding a separate atomic operation on a "prod_lock" word seems to pay off quite well.

For simplicity, I made the tail counter increment by 2 as well, and the consumer simply ignores the bottom bit of the head counter. Avoiding a separate atomic operation on a "prod_lock" word seems to pay off quite well.

Finally, it's worth noting that neither the exclusive producer nor exclusive consumer cases win much any more, so I can delete those altogether.

Finally, it's worth noting that neither the exclusive producer nor exclusive consumer cases win much any more, so I can delete those altogether.

Before tying this into antithread, there are several things to do:

- Re-audit to make sure the barriers are correct.

- Test on PowerPC (always good for finding missing barriers).

- Add in a decent notification mechanism, ie. futexes or falling back to pipes.

And that's next...